Automatic Movie Content Analysis

The MoCA Project

MoCA Project: Analysis and Retargeting of Ball Sports Video

The quality achieved by simply scaling a sports video to the limited display resolution of a mobile device is often insufficient: small details such as the ball or the lines on the playing field become unrecognizable. We present a novel approach to analyzing court-based ball sports videos. We have developed new techniques to distinguish actual playing frames, to detect players, and to track the ball. This information is used for advanced video retargeting, which emphasizes the essential content in the adapted videos.
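To make the loss of detail concrete, the following Python sketch computes how large an object remains after uniform downscaling. The resolutions (a 720x576 broadcast frame shown on a 176x144 display) and the 8-pixel ball diameter are assumptions chosen for illustration, not values taken from this project.

```python
# Hypothetical numbers for illustration: a PAL-resolution broadcast frame
# scaled uniformly to a QCIF-sized mobile display.
SOURCE_W, SOURCE_H = 720, 576  # assumed source resolution (pixels)
TARGET_W, TARGET_H = 176, 144  # assumed mobile display resolution (pixels)


def uniform_scale_factor(src_w, src_h, dst_w, dst_h):
    """Largest factor that fits the whole frame into the display
    while preserving its aspect ratio."""
    return min(dst_w / src_w, dst_h / src_h)


def scaled_size(size_px, factor):
    """Size of an object in the output frame after uniform scaling."""
    return size_px * factor


factor = uniform_scale_factor(SOURCE_W, SOURCE_H, TARGET_W, TARGET_H)
ball_px = 8  # assumed ball diameter in the source frame
print(f"scale factor: {factor:.3f}")
print(f"ball diameter after scaling: {scaled_size(ball_px, factor):.1f} px")
```

With these assumed numbers the scale factor is about 0.244, so an 8-pixel ball shrinks to roughly 2 pixels, which is barely visible on a small screen.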

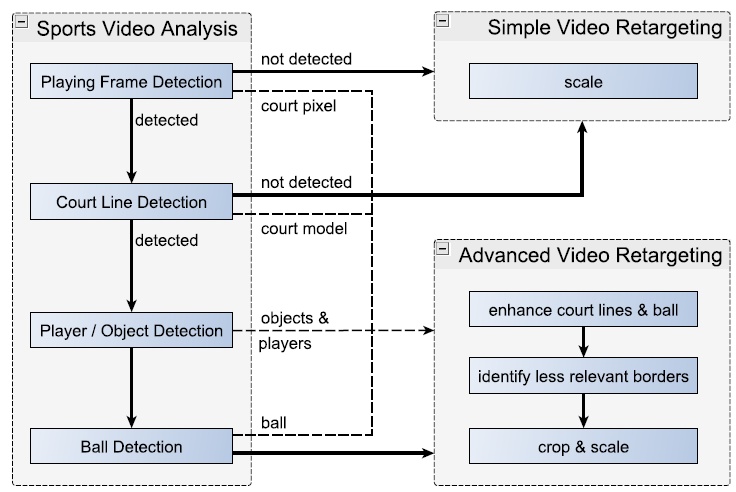

System overview

Our sports video analysis and adaptation system consists of four modules that analyze a video and one additional module for video retargeting (see the following figure). In a first step, the system distinguishes playing frames from other frames. Additional modules detect court lines, players, objects, and the ball. For each frame, the components are run one after another, and a frame is forwarded to the next module only if it was processed successfully in the current step. For example, if the court field is not detected in a frame, the algorithms that locate court lines or players are skipped. Previously computed data is reused wherever possible.
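The cascaded control flow described above can be sketched in Python as follows. This is a minimal sketch under stated assumptions: every module is modeled as a function that returns a result or None on failure, results are collected in a dictionary so that later modules can reuse earlier outputs, and the module names and the stub detectors reading precomputed dictionary fields (`court_color_ratio`, `court`, `players`, `ball`) are hypothetical placeholders rather than the project's actual algorithms.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional, Tuple

# A module receives the frame and the results of the modules that already ran.
# It returns its own result, or None if it could not process the frame.
Module = Callable[[Any, Dict[str, Any]], Optional[Any]]


@dataclass
class FrameAnalysis:
    results: Dict[str, Any] = field(default_factory=dict)
    stopped_at: Optional[str] = None  # first module that failed, if any


def analyze_frame(frame: Any, modules: List[Tuple[str, Module]]) -> FrameAnalysis:
    """Run the modules in order; forward the frame only while each step succeeds."""
    analysis = FrameAnalysis()
    for name, module in modules:
        result = module(frame, analysis.results)
        if result is None:
            analysis.stopped_at = name  # all remaining modules are skipped
            break
        analysis.results[name] = result  # available to later modules
    return analysis


# Hypothetical stub modules: a "frame" here is a dict of precomputed features.
def is_playing_frame(frame, results):
    return True if frame.get("court_color_ratio", 0.0) > 0.5 else None


def detect_court(frame, results):
    return frame.get("court")  # None if the court was not found


def detect_players(frame, results):
    # A real detector would use results["court"] to restrict the search area.
    return frame.get("players", [])


def track_ball(frame, results):
    return frame.get("ball")  # None if the ball was not found


PIPELINE = [
    ("playing_frame", is_playing_frame),
    ("court", detect_court),
    ("players", detect_players),
    ("ball", track_ball),
]

full = analyze_frame({"court_color_ratio": 0.8, "court": "model", "ball": (120, 64)}, PIPELINE)
no_court = analyze_frame({"court_color_ratio": 0.8}, PIPELINE)
print(full.stopped_at, list(full.results))
print(no_court.stopped_at, list(no_court.results))
```

For the second frame the court is not found, so the player detector and the ball tracker are never called, which mirrors the skipping behavior described in the text.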

The adaptation of a frame depends on the results of the analysis. A frame is scaled if no semantic information could be derived. Otherwise, the advanced video retargeting module uses all available information to adapt the frame.
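The decision between plain scaling and retargeting can be illustrated with a simple crop-based rule. This Python sketch is an assumption for illustration only, not the project's retargeting method: when no semantic information is available, the whole frame is mapped to the display; otherwise a padded bounding box around the detected players and ball is chosen as the source region. The box format, the `margin` parameter, and the function names are hypothetical, and aspect-ratio correction is omitted.

```python
from typing import Dict, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1) in source-frame pixels


def union_box(boxes: List[Box]) -> Box:
    """Smallest box that contains all given boxes."""
    x0s, y0s, x1s, y1s = zip(*boxes)
    return min(x0s), min(y0s), max(x1s), max(y1s)


def choose_source_region(
    frame_w: int,
    frame_h: int,
    semantics: Optional[Dict[str, List[Box]]],
    margin: int = 16,
) -> Box:
    """Return the region of the source frame that is mapped onto the display.

    No semantic information -> the whole frame (plain scaling).
    Otherwise -> a padded box around players and ball, clipped to the frame.
    """
    boxes = [] if not semantics else semantics.get("players", []) + semantics.get("ball", [])
    if not boxes:
        return 0, 0, frame_w, frame_h
    x0, y0, x1, y1 = union_box(boxes)
    return (
        max(0, x0 - margin),
        max(0, y0 - margin),
        min(frame_w, x1 + margin),
        min(frame_h, y1 + margin),
    )


detections = {
    "players": [(100, 200, 140, 300), (300, 180, 340, 290)],
    "ball": [(250, 150, 258, 158)],
}
print(choose_source_region(720, 576, None))        # (0, 0, 720, 576)
print(choose_source_region(720, 576, detections))  # (84, 134, 356, 316)
```

Falling back to the full frame keeps the output well defined for frames where the analysis failed, while the crop enlarges players and ball whenever detections exist.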

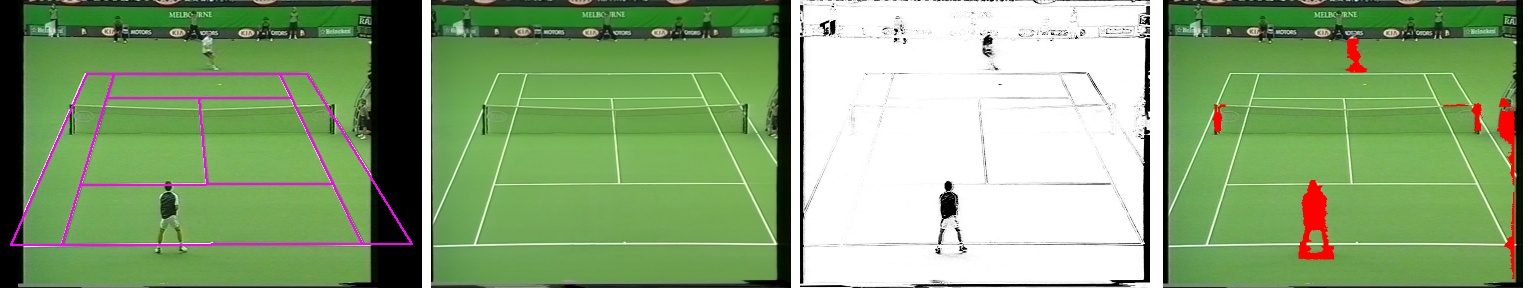

The following figure shows exemplary intermediate results of the processing steps. From left to right: court model, background image, difference image, and player and object segmentation.
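The background and difference images named above can be illustrated on tiny grayscale frames. The Python sketch below assumes a per-pixel temporal median as the background model and a fixed threshold for segmentation; both choices, the threshold value, and the function names are hypothetical and are not documented as the project's method.

```python
from statistics import median
from typing import List

Image = List[List[int]]  # grayscale image, row-major


def background_image(frames: List[Image]) -> Image:
    """Per-pixel temporal median: a moving player or ball is suppressed
    if it covers a pixel in fewer than half of the frames."""
    h, w = len(frames[0]), len(frames[0][0])
    return [[median(f[y][x] for f in frames) for x in range(w)] for y in range(h)]


def difference_image(frame: Image, background: Image) -> Image:
    """Absolute per-pixel difference between a frame and the background."""
    return [[abs(p - b) for p, b in zip(row, brow)] for row, brow in zip(frame, background)]


def segment(diff: Image, threshold: int = 30) -> List[List[bool]]:
    """Foreground mask: pixels that differ clearly from the background."""
    return [[d > threshold for d in row] for row in diff]


# A bright "player" (200) moves across a uniform court (100) in three frames.
f0 = [[200, 100, 100], [100, 100, 100]]
f1 = [[100, 200, 100], [100, 100, 100]]
f2 = [[100, 100, 200], [100, 100, 100]]

bg = background_image([f0, f1, f2])
mask = segment(difference_image(f1, bg))
print(bg)    # [[100, 100, 100], [100, 100, 100]]
print(mask)  # [[False, True, False], [False, False, False]]
```

Because the player occupies each pixel in only one of three frames, the median recovers the empty court, and the difference image isolates the player in the middle frame.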

Additional material

Sports video adaptation results

Download: video, presentation, paper